When deploying VMware Cloud Foundation (VCF), we deploy an appliance (OVA) called Cloud Builder. This appliance allows us to load the parameter file and automate the deployment of the entire infrastructure, taking us from spreadsheet to full SDDC.

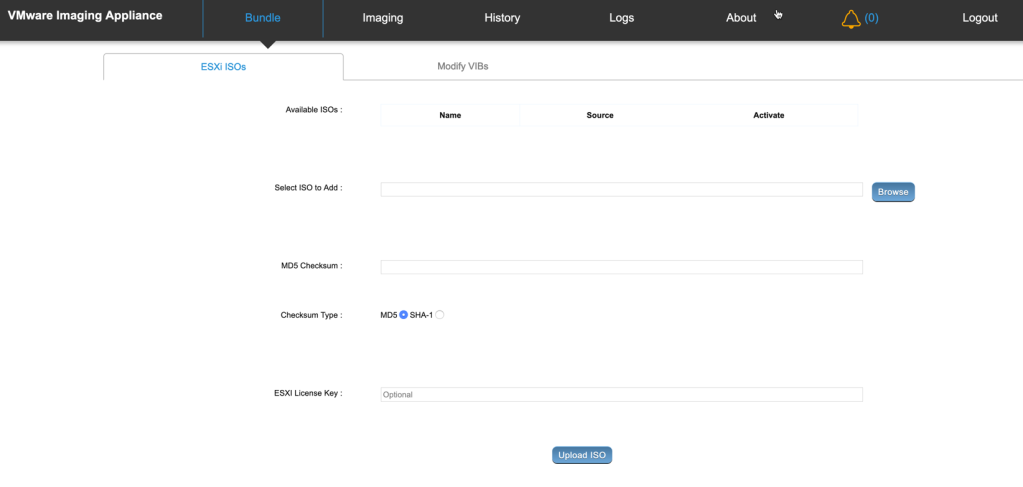

Cloud Builder also includes the VMware Imaging Appliance (VIA), which you can use to build your ESXi hosts. Just navigate to https://Cloud_Builder_VM_IP:8445/via and log in with the admin credentials you set during the Cloud Builder OVA deployment. Some documents out there say to use root with the password EvoSddc!2016, but that will most likely not work, at least not on newer versions. More on VIA here.

Anyway, once the bringup of VCF has been completed, Cloud Builder will most likely not be used again, so you can safely remove it. However, if you plan to deploy other VCF environments or, like me, are constantly deploying VCF in lab environments, you can “refresh/reuse” Cloud Builder via a couple of options… See what I did there?!? via … like the imaging appliance.. never mind.

So the first option is to simply take a snapshot of Cloud Builder after the initial deployment of the OVA and BEFORE you log in to it and start importing the parameter file. Once you are done using Cloud Builder, you can simply revert to the snapshot and reuse the appliance.

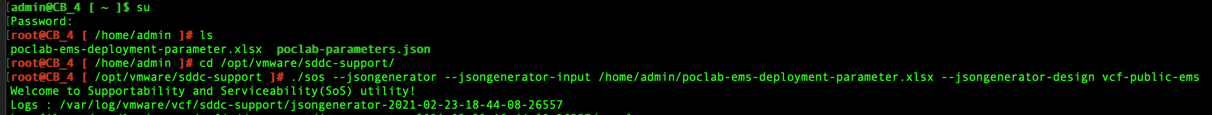

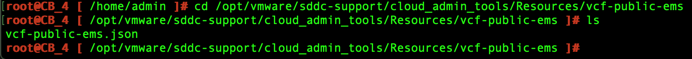
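If you would rather script the snapshot than click through the UI, here is a minimal sketch using the `govc` CLI. It is not part of the original workflow. It assumes `govc` is installed and that `GOVC_URL`, `GOVC_USERNAME` and `GOVC_PASSWORD` point at the host or vCenter running the appliance. The VM name `cloud-builder` and snapshot name `clean-deploy` are made-up placeholders. The script only prints the commands so you can review them before running anything.

```shell
#!/bin/sh
# Sketch only: prints the govc commands for the snapshot approach above.
# Assumes govc is installed and configured through the GOVC_* variables.
VM_NAME="${VM_NAME:-cloud-builder}"      # hypothetical VM name
SNAP_NAME="${SNAP_NAME:-clean-deploy}"   # hypothetical snapshot name

# Print the govc command for the requested action (create or revert).
snapshot_cmd() {
    case "$1" in
        create) echo "govc snapshot.create -vm $VM_NAME $SNAP_NAME" ;;
        revert) echo "govc snapshot.revert -vm $VM_NAME $SNAP_NAME" ;;
        *) echo "usage: snapshot_cmd create|revert" >&2; return 1 ;;
    esac
}

# Right after deploying the OVA, before the first login:
snapshot_cmd create
# Once the bringup is done and you want a fresh appliance again:
snapshot_cmd revert
```

Copy the printed line you need and run it once you are happy with the names.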

If you prefer not to take the snapshot, or forgot to do it at the beginning, no worries. We can run a few commands within Cloud Builder to remove the previous entries and REUSE Cloud Builder.

- `su -`

- `psql --host=localhost -U postgres -d bringup`

- `delete from execution;`

- `delete from "Resource";`

- `\q`
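The steps above can also be wrapped in a small script. This is just a sketch: it assumes you run it as root on the Cloud Builder appliance and that the database and table names match the ones used above. The function names and the `APPLY` switch are my own invention for illustration. By default it only previews the SQL, and it touches the database only when `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: wraps the manual psql steps above in one helper.
# Assumes root on the Cloud Builder appliance and the same database
# (bringup) and tables (execution, "Resource") as the manual steps.

# Print the SQL that clears the previous bringup entries.
reset_sql() {
    printf '%s\n' 'DELETE FROM execution;' 'DELETE FROM "Resource";'
}

# Hypothetical helper: previews the SQL unless APPLY=1 is set.
reset_cloud_builder() {
    if [ "${APPLY:-0}" = "1" ]; then
        # psql reads the statements from standard input
        reset_sql | psql --host=localhost -U postgres -d bringup
    else
        echo "Dry run; would send to the bringup database:"
        reset_sql
    fi
}

reset_cloud_builder
```

Run it once as-is to see what would be deleted, then again with `APPLY=1` in front of the script name to actually clear the entries.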

Disclaimer: this is most likely not officially supported, and hacking at the database is done at your own risk. Since this is Cloud Builder, the risk is low and you can always deploy a new appliance if you mess it up, but still, be careful.

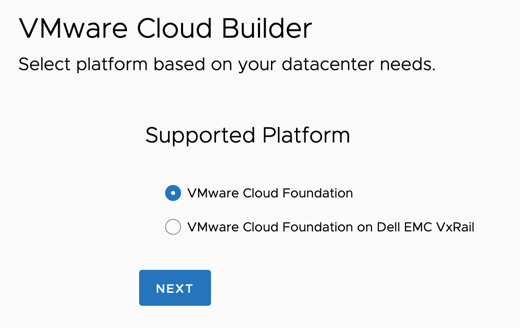

That’s it. This removes the previous entries, and the next time you log in to Cloud Builder it will feel like you just deployed the appliance, meaning you have to go through the initial prompts again, such as accepting the EULA and selecting the platform to be used.